Toward the end of the 20th century, the standard cosmological model seemed complete. Full of mysteries, yes. Brimming with fertile areas for further research, definitely. But on the whole it held together: the universe consisted of approximately two-thirds dark energy (a mysterious something that is accelerating the expansion of the universe), maybe a quarter dark matter (a mysterious something that determines the evolution of structure in the universe), and 4 or 5 percent “ordinary” matter (the stuff of us—and of planets, stars, galaxies and everything else we had always thought, until the past few decades, constituted the universe in its entirety). It added up.

Not so fast. Or, more accurately, too fast.

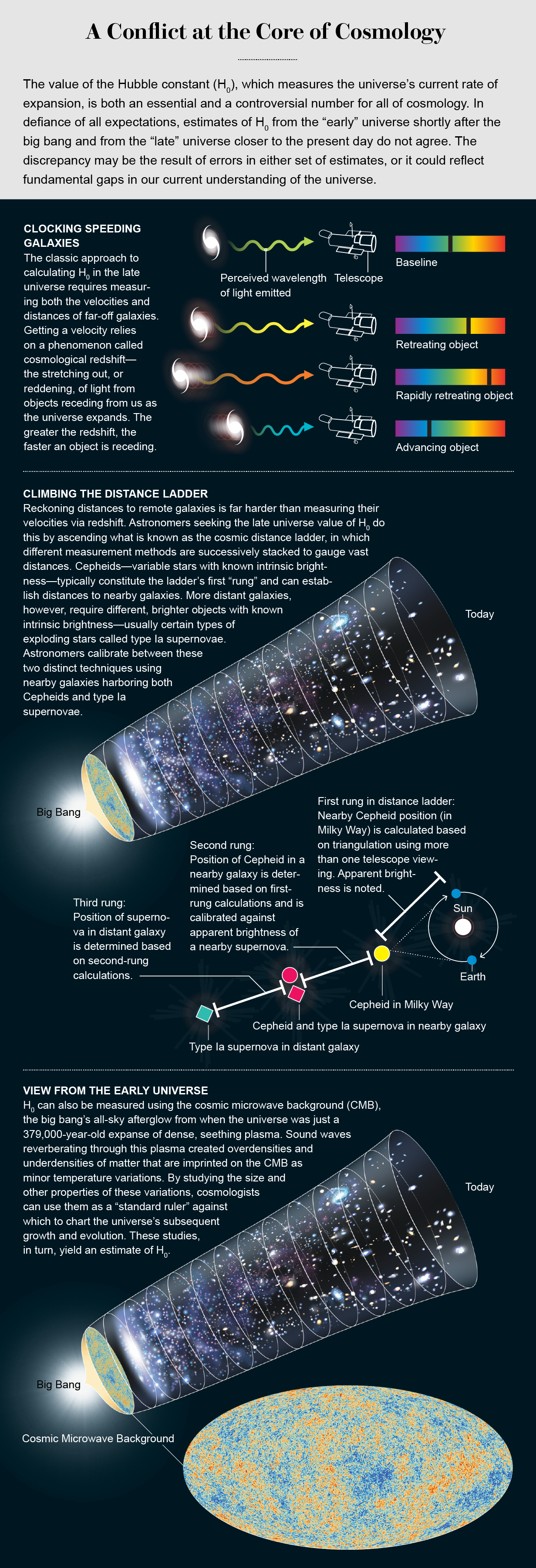

In recent years a discrepancy has emerged between two ways of measuring the rate of the universe’s expansion, a value called the Hubble constant (H0). Measurements beginning in today’s universe and working backward to earlier and earlier stages have consistently revealed one value for H0. Measurements beginning at the earliest stages of the universe and working forward, however, have consistently predicted another, lower value. If the direct measurements are right, the universe is expanding faster than we had thought.

The discrepancy is mathematically subtle but—as subtle mathematical discrepancies magnified to the spacetime scale of the universe often are—cosmically significant. Knowing the current expansion rate of the universe helps cosmologists extrapolate backward in time to determine the age of the universe. It also allows them to extrapolate forward in time to figure out when, according to current theory, the space between galaxies will have grown so vast that the cosmos will look like an empty expanse beyond our own immediate surroundings. A correct value of H0 might even help elucidate the nature of the dark energy driving the acceleration.

So far measurements of the early universe looking forward predict one value for H0, and measurements from the recent universe looking backward reveal another. This sort of situation is not rare in science. Usually it disappears under closer scrutiny—and the assumption that it would disappear has reassured cosmologists for the past decade. But the disagreement has, if anything, hardened year after year, each set of measurements growing more and more intractable. And now a consensus on the problem has emerged.

Nobody is suggesting that the entire standard cosmological model is wrong. But something is wrong—maybe with the observations or maybe with the interpretation of the observations, although each scenario is unlikely. This leaves one last option—equally unlikely but also less and less unthinkable: something is wrong with the cosmological model itself.

For most of human history the “study” of our cosmic origins was a matter of myth—variations on the theme of “in the beginning.” In 1925 American astronomer Edwin Hubble edged it toward empiricism when he announced that he had solved a centuries-long mystery about the identity of smudges in the heavens—what astronomers called “nebulae.” Were nebulae gaseous formations that resided in the canopy of stars? If so, then maybe that canopy of stars, stretching as far as the most powerful telescopes could see, was the universe in its entirety. Or were nebulae “island universes” all their own? At least one nebula is, Hubble discovered: what we today call the Andromeda galaxy.

Furthermore, when Hubble looked at the light from other nebulae, he found that the wavelengths had stretched toward the red end of the visible spectrum, suggesting that each source was moving away from Earth. (The speed of light remains constant. What changes is the length between waves, and that length determines color.) In 1927 Belgian physicist and priest Georges Lemaître noticed a pattern: The more distant the galaxy, the greater its redshift. The farther away it was, the faster it receded. In 1929 Hubble independently reached the same conclusion: the universe is expanding.
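The pattern Lemaître and Hubble found is usually written as a simple proportionality, v = H0 × d. The Python sketch below is only an illustration: the value of 70 km/s per megaparsec is an assumed round number rather than a figure from this passage, and the shortcut z ≈ v/c holds only for relatively nearby galaxies.

```python
# Hubble's law as a proportionality: v = H0 * d.
# H0_EXAMPLE is an illustrative assumption, not a measured value from the text.
C_KM_S = 299_792.458   # speed of light in km/s
H0_EXAMPLE = 70.0      # km/s per megaparsec (assumed for illustration)


def recession_velocity(distance_mpc, h0=H0_EXAMPLE):
    """Recession velocity in km/s for a galaxy at distance_mpc megaparsecs."""
    return h0 * distance_mpc


def approx_redshift(distance_mpc, h0=H0_EXAMPLE):
    """Low-redshift approximation z ~ v / c; only valid when v is much less than c."""
    return recession_velocity(distance_mpc, h0) / C_KM_S


for d in (10, 100, 200):
    v = recession_velocity(d)
    z = approx_redshift(d)
    print(f"{d:>4} Mpc: v = {v:8.0f} km/s, z ~ {z:.4f}")
```

Doubling the distance doubles both the velocity and the approximate redshift, which is the pattern described above.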

Expanding from what? Reverse the outward expansion of the universe, and you eventually wind up at a starting point, a birth event of sorts. Almost immediately a few theorists suggested a kind of explosion of space and time, a phenomenon that later acquired the (initially derogatory) moniker “big bang.” The idea sounded fantastical, and for several decades, in the absence of empirical evidence, most astronomers could afford to ignore it. That changed in 1965, when two papers were published simultaneously in the Astrophysical Journal. The first, by four Princeton University physicists, predicted the current temperature of a universe that had emerged out of a primordial fireball. The second, by two Bell Labs astronomers, reported the measurement of that temperature.

The Bell Labs radio antenna recorded a layer of radiation from every direction in the sky—something that came to be known as the cosmic microwave background (CMB). The temperature the scientists derived from it of three degrees above absolute zero did not exactly match the Princeton collaboration’s prediction, but for a first try, it was close enough to quickly bring about a consensus on the big bang interpretation. In 1970 one-time Hubble protégé Allan R. Sandage published a highly influential essay in Physics Today that in effect established the new science’s research program for decades to come: “Cosmology: A Search for Two Numbers.” One number, Sandage said, was the current rate of the expansion of the universe—the Hubble constant. The second number was the rate at which that expansion was slowing down—the deceleration parameter.

Scientists settled on a value for the second number first. Beginning in the late 1980s, two teams of scientists set out to measure the deceleration by working with a common assumption and a common tool. The assumption was that in an expanding universe full of matter interacting gravitationally with all other matter, everything tugging on everything else, the expansion must be slowing. The tool was type Ia supernovae, exploding stars that astronomers believed could serve as standard candles: sources of light whose intrinsic brightness does not vary from one example to another and whose apparent brightness therefore reveals their relative distance. (A 60-watt light bulb will appear dimmer and dimmer as you move farther away from it, but if you know it is a 60-watt bulb, you can deduce its separation from you.) If expansion is slowing, the astronomers assumed, a supernova at some great distance from Earth would be closer, and therefore brighter, than if the universe were growing at a constant rate.
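The light bulb analogy rests on the inverse-square law: apparent brightness falls off with the square of the distance. Here is a minimal Python sketch of that reasoning. It assumes the source radiates equally in all directions and, for simplicity, treats the bulb's 60 watts as its total radiated power. The function names are illustrative, and real supernova work involves corrections that are ignored here.

```python
import math


def flux(luminosity_watts, distance_m):
    """Apparent brightness (W/m^2) of a source radiating equally in all directions."""
    return luminosity_watts / (4.0 * math.pi * distance_m ** 2)


def distance_from_flux(luminosity_watts, measured_flux):
    """Invert the inverse-square law: distance (m) to a source of known luminosity."""
    return math.sqrt(luminosity_watts / (4.0 * math.pi * measured_flux))


# Simplifying assumption: all 60 watts leave the bulb as light.
BULB_WATTS = 60.0

f_near = flux(BULB_WATTS, 10.0)
f_far = flux(BULB_WATTS, 20.0)

# Doubling the distance cuts the apparent brightness to a quarter.
print(f_near / f_far)
# Knowing the wattage, the measured brightness gives back the 20 m separation.
print(distance_from_flux(BULB_WATTS, f_far))
```

A supernova that looks dimmer than expected is, by the same logic, farther away than expected.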

What both teams independently discovered, however, was that the most distant supernovae were dimmer than expected and therefore farther away. In 1998 they announced their conclusion: The expansion of the universe is not slowing down. It is speeding up. The cause of this acceleration came to be known as “dark energy”—a name to be used as a placeholder until someone figures out what it actually is.

A value for Sandage’s first number—the Hubble constant—soon followed. For several decades the number had been a source of contention among astronomers. Sandage himself had claimed H0 would be around 50 (the expansion rate expressed in kilometers per second per 3.26 million light-years), a value that would put the age of the universe at about 20 billion years. Other astronomers favored an H0 near 100, or an age of roughly 10 billion years. The discrepancy was embarrassing: even a brand-new science should be able to constrain a fundamental number within a factor of two.
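The link between H0 and age can be checked with a rough calculation. The reciprocal 1/H0, often called the Hubble time, is the age the universe would have if it had always expanded at today's rate. The Python sketch below converts units under that simplifying assumption. The true age depends on how the expansion rate has changed over time, so these are ballpark figures only.

```python
KM_PER_MPC = 3.0856775814913673e19   # kilometers in one megaparsec (about 3.26 million light-years)
SECONDS_PER_GYR = 3.15576e16         # seconds in a billion Julian years


def hubble_time_gyr(h0_km_s_mpc):
    """Return 1/H0 in billions of years for H0 given in km/s per megaparsec."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC
    return 1.0 / h0_per_second / SECONDS_PER_GYR


for h0 in (50, 100):
    print(f"H0 = {h0:>3}: 1/H0 = {hubble_time_gyr(h0):.1f} billion years")
```

The two cases come out near 19.6 and 9.8 billion years, in line with the "about 20" and "roughly 10" quoted above.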

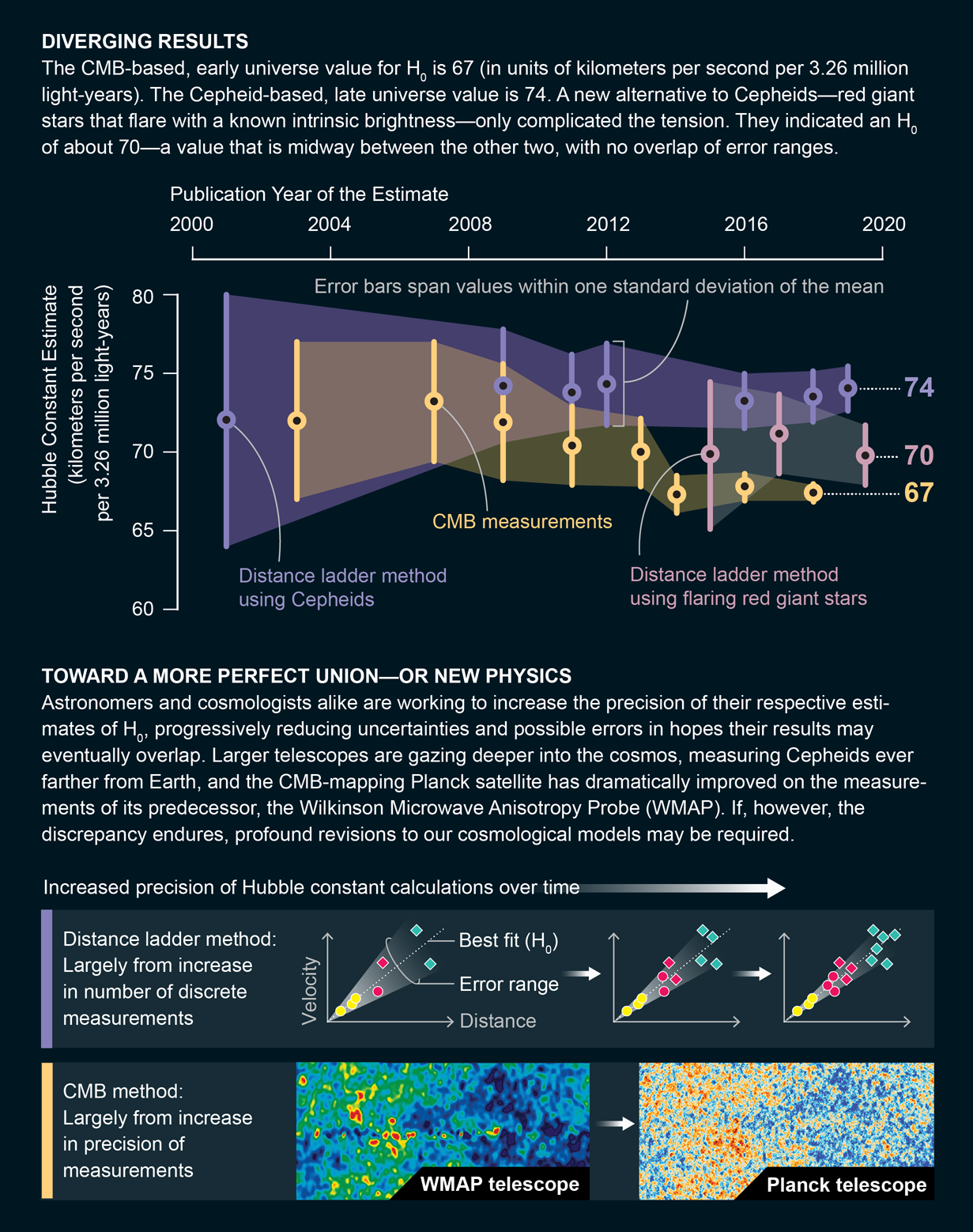

In 2001 the Hubble Space Telescope Key Project completed the first reliable measurement of the Hubble constant. In this case, the standard candles were Cepheid variables, stars that brighten and dim with a regularity that corresponds to their absolute luminosity (their 60-watt-ness, so to speak). The Key Project wound up essentially splitting the difference between the two earlier values: 72 ± 8.

The next purely astronomical search for the constant was carried out by SH0ES (Supernovae, H0, for the Equation of State of Dark Energy), a team led by Adam G. Riess, who in 2011 shared the Nobel Prize in Physics for his role in the 1998 discovery of acceleration. This time the standard candles were both Cepheids and type Ia supernovae, and the latter included some of the most distant supernovae ever observed. The initial result, in 2005, was 73 ± 4, nearly identical to the Key Project’s but with a narrower margin of error. Since then, SH0ES has provided regular updates, all of them consistent with one another and carrying an ever-narrowing margin of error. The most recent, in 2019, was 74.03 ± 1.42.

All these determinations of H0 involve the traditional approach of astronomy: starting in the here and now, the realm that cosmologists call the late universe, and peering farther and farther across space, which is to say (because the velocity of light is finite) further and further back in time, as far as they can see. In the past couple of decades, however, researchers have also begun using the opposite approach. They begin at a point as far away as they can see and work their way forward to the present. The cutoff point—the curtain between what we can and cannot see, between the “early” and the “late” universe—is the same CMB that the astronomers using the Bell Labs radio antenna first observed in the 1960s.

The CMB is relic radiation from the period when the universe, at the young age of 379,000 years, had cooled enough for hydrogen atoms to form, dissipating the dense fog of free protons and electrons and making enough room for photons of light to travel through the universe. Although the first Bell Labs image of the CMB was a smooth expanse, theorists assumed that at a higher resolution, the background radiation would reveal variations in temperature representing the seeds of density that would evolve into the structure of the universe as we know it: galaxies, clusters of galaxies and superclusters of galaxies.

In 1992 the first space probe of the CMB, the Cosmic Background Explorer, found those signature variations; in 2003 a follow-up space probe, the Wilkinson Microwave Anisotropy Probe (WMAP), provided far higher resolution—high enough that physicists could identify the size of primitive sound waves made by primitive matter. As you might expect from sound waves that have been traveling at nearly the speed of light for 379,000 years, the “spots” in the CMB share a common radius of about 379,000 light-years. And because those spots grew into the universe we study today, cosmologists can use that initial size as a “standard ruler” with which to measure the growth and expansion of the large-scale structure to the present day. Those measures, in turn, reveal the rate of the expansion—the Hubble constant.

The first measurement of H0 from WMAP, in 2003, was 72 ± 5. Perfect. The number exactly matched the Key Project’s result, with the additional benefit of a narrower error range. Further results from WMAP shifted only slightly: 73 in 2007, 72 in 2009, 70 in 2011. No problem, though: the error ranges for the SH0ES and WMAP measurements still overlapped in the 72-to-73 range.

By 2013, however, the two margins were barely kissing. The most recent result from SH0ES at that time showed a Hubble constant of 74 ± 2, and WMAP’s final result showed a Hubble constant of 70 ± 2. Even so, not to worry. The two methods could agree on 72. Surely one method’s results would begin to trend toward the other’s as methodology and technology improved—perhaps as soon as the first data were released from the Planck space observatory, the European Space Agency’s successor to WMAP.

That release came in 2014: 67.4 ± 1.4. The error ranges no longer overlapped—not even close. And subsequent data released from Planck have proved just as unyielding as SH0ES’s. The Planck value for the Hubble constant has stayed at 67, and the margin of error shrank to one and then, in 2018, a fraction of one.
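The difference between margins that are "barely kissing" and margins that no longer overlap is simple arithmetic on the quoted values. The Python sketch below treats each result as a plain range of value plus or minus error, which is a simplification of how such uncertainties are handled statistically. The helper names are illustrative.

```python
def interval(value, error):
    """Return the (low, high) range spanned by value +/- error."""
    return (value - error, value + error)


def gap_between(a, b):
    """Gap between two (low, high) ranges; zero or negative means they touch or overlap."""
    return max(a[0], b[0]) - min(a[1], b[1])


# Values as quoted in the text, in km/s per megaparsec.
shoes_2013 = interval(74.0, 2.0)
wmap_final = interval(70.0, 2.0)
planck_first = interval(67.4, 1.4)

# SH0ES vs. WMAP: the ranges meet exactly at 72, so the gap is zero.
print(gap_between(shoes_2013, wmap_final))
# SH0ES vs. Planck: the ranges are separated by a gap of about 3.2.
print(gap_between(shoes_2013, planck_first))
```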

“Tension” is the scientific term of art for such a situation, as in the title of a conference at the Kavli Institute for Theoretical Physics (KITP) in Santa Barbara, Calif., last summer: “Tensions between the Early and the Late Universe.” The first speaker was Riess, and at the end of his talk he turned to another Nobel laureate in the auditorium, David Gross, a particle physicist and a former director of KITP, and asked him what he thought: Do we have a “tension,” or do we have a “problem”?

Gross cautioned that such distinctions are “arbitrary.” Then he said, “But yeah, I think you could call it a problem.” Twenty minutes later, at the close of the Q and A, he amended his assessment. In particle physics, he said, “we wouldn’t call it a tension or a problem but rather a crisis.”

“Okay,” Riess said, wrapping up the discussion. “Then we’re in crisis, everybody.”

Unlike a tension, which requires a resolution, or a problem, which requires a solution, a crisis requires something more—a wholesale rethink. But of what? The investigators of the Hubble constant see three possibilities.

One is that something is wrong in the research into the late universe. A cosmic “distance ladder” stretching farther and farther across the universe is only as sturdy as its rungs—the standard candles. As in any scientific observation, systematic errors are part of the equation.

This possibility roiled the KITP conference. A group led by Wendy L. Freedman, an astrophysicist now at the University of Chicago who had been a principal investigator on the Key Project, dropped a paper in the middle of the conference that announced a contrarian result. By using yet another kind of standard candle—stars called red giants that, on the verge of extinction, undergo a “helium flash” that reliably indicates their luminosity—Freedman and her colleagues had arrived at a value that, as their paper said, “sits midway in the range defined by the current Hubble tension”: 69.8 ± 0.8—a result that offers no reassuring margin-of-error overlap with that from either SH0ES or Planck.
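One common way to express how far apart two measurements sit is to divide their difference by their combined error. The Python sketch below does this for the three values quoted so far. It assumes the errors are independent and Gaussian, so that they add in quadrature, and it uses the error from Planck's first release because the later, smaller errors are not given numerically here. The published analyses treat their uncertainties in more detail, so the output is a rough guide only.

```python
import math


def sigma_separation(value_a, error_a, value_b, error_b):
    """Difference between two measurements in units of their combined error.

    Assumes independent, Gaussian errors, so the errors add in quadrature.
    """
    return abs(value_a - value_b) / math.hypot(error_a, error_b)


# (value, error) pairs as quoted in the text, in km/s per megaparsec.
FREEDMAN = (69.8, 0.8)      # red giant result
SHOES_2019 = (74.03, 1.42)  # SH0ES 2019 update
PLANCK = (67.4, 1.4)        # Planck's first release; later errors were smaller

print(round(sigma_separation(*FREEDMAN, *SHOES_2019), 1))
print(round(sigma_separation(*FREEDMAN, *PLANCK), 1))
print(round(sigma_separation(*SHOES_2019, *PLANCK), 1))
```

With these inputs the red giant value sits between the other two, and the widest separation is the one between SH0ES and Planck.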

The timing of the paper seemed provocative to at least some of the other late universe researchers in attendance. The SH0ES team in particular had little opportunity to digest the data (which the scientists tried to do over dinner that evening), let alone figure out how to respond.

A mere three weeks later, though, they posted a response paper. The method that Freedman’s team used “is a promising standard candle for measuring extragalactic distances,” the authors began, diplomatically, before eviscerating the systematic errors they believed affected the team’s results. Riess and his colleagues’ preferred interpretation of the red giant data restored the Hubble constant to a value well within its previous confines: 72.4 ± 1.9.

Freedman vehemently disagrees with that interpretation: “It’s wrong! It’s completely wrong!” she says. “They have misunderstood the method, although we have explained it to them at several meetings.”

(In early October 2019, at yet another “tension” meeting, the dispute took a personal turn when Barry Madore—one of Freedman’s collaborators, as well as her spouse—showed a slide that depicted Riess’s head in a guillotine. The image was part of a science-related chopping-block metaphor, and Madore later said that including Riess’s head was a joke. But Riess was in the audience; suffice to say that the next coffee break included, at the insistence of many of the attendees, a discussion about professional codes of conduct.)

Such squabbles cannot help but leave particle physicists figuring that, yes, the problem lies with the astronomers and the errors involving the distance ladder method. But CMB observations and the cosmic ruler must come with their own potential for systematic errors, right? In principle, yes. But few (if any) astronomers think the problem lies with the Planck observatory, which physicists believe to have reached the precision threshold for space observations of the CMB. In other words, Planck’s measurements of the CMB are probably as good as they are ever going to get. “The data are spectacular,” says Nicholas Suntzeff, a Texas A&M astronomer who has collaborated with both Freedman and Riess, though not on the Hubble constant. “And independent observations” of the CMB—at the South Pole Telescope and the Atacama Large Millimeter Array—“show there are no errors.”

If the source of the Hubble tension is not in the observations of either the late universe or the early universe, then cosmologists have little choice but to pursue option three: “new physics.”

For nearly a century now scientists have been talking about new physics—forces or phenomena that would fall outside our current knowledge of the universe. A decade after Albert Einstein introduced his general theory of relativity in 1915, the advent of quantum mechanics compromised its completeness. The universe of the very large (the one operating according to the rules of general relativity) proved to be mathematically incompatible with the universe of the very small (the one operating according to the rules of quantum mechanics).

For a while physicists could disregard the problem, as the two realms did not intersect on a practical level. But then came the discovery of the CMB, validating the idea that the universe of the very large actually emerged from the universe of the very small—that the large-scale galaxies and clusters we study with the help of general relativity grew out of quantum fluctuations. The Hubble tension arises directly out of an attempt to match those two types of physics. The quantum fluctuations in the CMB predict that the universe will mature with one value of the Hubble constant, whereas the general relativistic observations being made today are revealing another value.

Riess likens the discrepancy to a person’s growth. “You’ve got a child, and you can measure their height very precisely when they’re two years old,” he says. “And you can then use your understanding of how people grow, like a growth chart, to predict their final height at the end.” Ideally the prediction and measurement would agree. “In this case,” he says, “they don’t.” Then again, he adds, “We don’t have a growth chart for how universes usually grow.”

And so cosmologists have begun entertaining the radical—yet not altogether unpalatable—possibility that the standard cosmological model is not as complete as they have assumed it to be.

One possible factor affecting our understanding of the universe’s growth is an uncertainty about the particle census of the universe. Most scientists today are old enough to remember another imbalance between observation and theory: the “solar neutrino problem,” a decades-long dispute about electron neutrinos from the sun. Theorists predicted one amount; neutrino detectors indicated another. Physicists suspected systematic errors in the observations. Astronomers questioned the completeness of the theory. As with the Hubble constant tension, neither side budged—until the end of the millennium, when researchers discovered that neutrinos, unexpectedly, have mass; theorists adjusted the Standard Model of particle physics accordingly. A similar adjustment now—for instance, a new variety of neutrino in the early universe—might alter the distribution of mass and energy just enough to account for the differences in measurement.

Another possible explanation is that the influence of dark energy changes over time—a reasonable alternative, considering that cosmologists do not know how dark energy works, let alone what it is.

“There is a small correction somewhere needed to bring the numbers into agreement,” Suntzeff says. “That is new physics, and that is what excites cosmologists—a kink in the wall of the Standard Model, something new to work on.”

Everybody knows what they have to do next. Observers will await data from Gaia, a European Space Agency observatory that promises, in the next couple of years, unprecedented precision in the measurement of distances to more than a billion stars in our galaxy. If those measurements do not match the values that astronomers have been using as the first rung in the distance ladder, then maybe the problem will have been systematic errors after all. Theorists, meanwhile, will continue to churn out alternative interpretations of the universe. So far, though, they have not found one that withstands community scrutiny. And there, barring any breakthrough, the tension—problem, crisis—will have to reside for now: in a quasi-unscientific universe harboring a predicted Hubble constant of 67 that belies the observation of 74.

The standard cosmological model remains one of the great scientific triumphs of the age. In half a century cosmology has matured from speculation to (near) certainty. It might not be as complete as cosmologists believed it to be even a year ago, yet it remains a textbook example of how science works at its best: it raises questions, it provides answers and it hints at mystery.